Building the Foundation First: Sequencing Data Architecture Before AI in National Security


National Security leaders and technologists often voice frustration at how long it takes to adopt new technology in the U.S. Department of War (DoW). Yet this slow pace has less to do with unwillingness and more to do with complexity. Integrating cutting-edge tools into military operations isn’t as simple as plug-and-play; we are building entire digital ecosystems that must withstand the stresses of real-world operations. Even the 2025 National Security Strategy emphasizes strengthening America’s digital infrastructure and deploying AI responsibly, underscoring that success with DoW tech begins with a solid foundation. The message is clear: to move faster, we must first get the sequence right. Build the data and digital backbone, then layer on advanced AI and autonomous capabilities, all while ensuring new systems are resilient, explainable, and useful at scale.

The Challenge: Ecosystems, Not Apps

In a combat environment or any large military enterprise, new technology cannot exist in a vacuum. A fancy algorithm or gadget is useless if it can’t connect with legacy systems, comply with security requirements, and scale across global operations. This is why adopting technology in the DoW often feels harder and slower than in the commercial world. We’re not installing a single app; we’re upgrading an ecosystem. The ecosystem must handle far-flung networks, intermittent connectivity, classified environments, and cyber threats, all while under operational stress. It’s little wonder that rushing to field a “quick tech fix” often backfires. As one Army AI lead put it, “When it comes to AI, we think about the AI stack, it’s more than just an algorithm. How are the sensors, data management, algorithms, and human interaction all integrated?” In the DoW, technology adoption demands a holistic approach from day one.

Case in point: At U.S. Special Operations Command Pacific (SOCPAC), early attempts to bring in AI started not with the AI itself, but with the data. The first step was simply making sense of what data we had, understanding what it was, where it lived, and how it related to other data. Only after mapping out the data landscape could we even think about applying advanced tools. This experience reflects a broader reality: if the data layer is a mess, any AI or autonomous system built on top will inherit that chaos. In other words, effective AI begins with effective data management.

Data First: Understanding and Organizing the Battlefield of Information

Before the DoW can successfully field AI-driven weapons systems or autonomous platforms, it must have its data house in order. This means taking inventory of data sources, cleaning and labeling datasets, and breaking down silos so that information flows where it’s needed. At SOCPAC, this “data triage” was step one, a painstaking but crucial effort to build a common picture of our information holdings. Special Operations and Army leaders have consistently noted that data standards and governance are the first step toward AI excellence. In the drive toward initiatives like Joint All-Domain Command-and-Control (JADC2), they recognize that “AI will be a warfighter enabler” only if we take a “holistic approach” to data upfront.

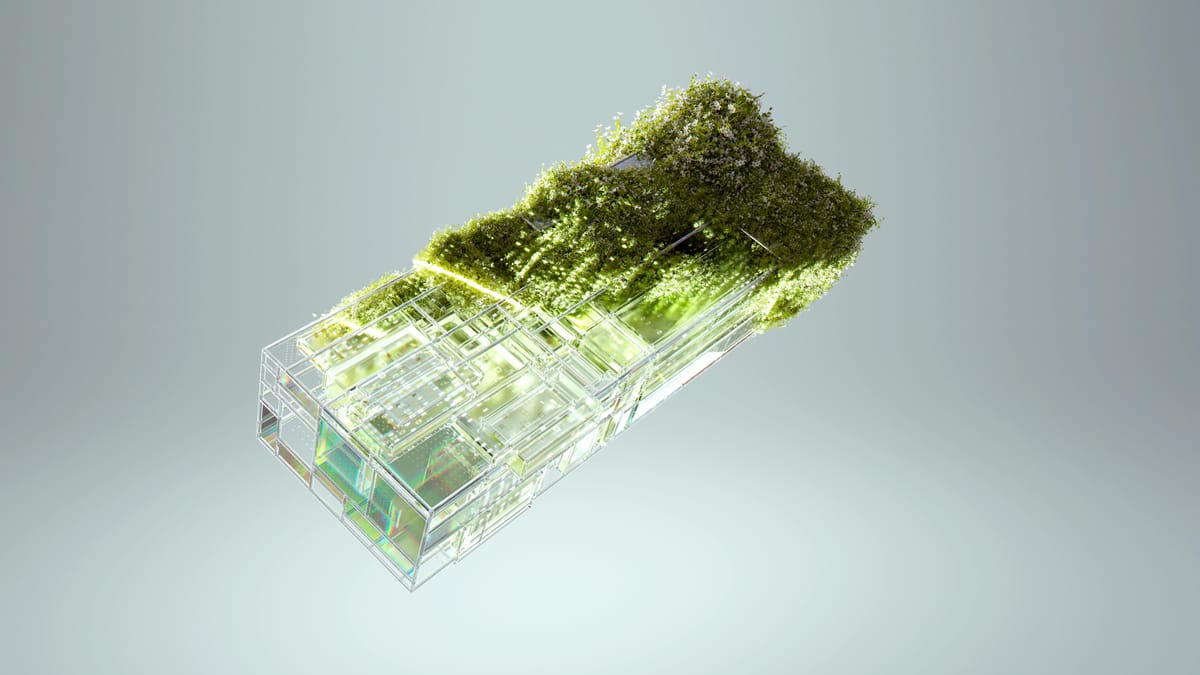

Putting data first entails establishing a “quality data” foundation, the base of the pyramid on which all analytics and AI rest. The Pentagon’s Chief Digital and AI Office (CDAO) frames this as a hierarchy of needs: at the bottom is high-quality, accessible data; at the top is the effective, responsible AI that data enables. In practical terms, this means investing in data cataloging, metadata standards, cloud data lakes or warehouses, and governance rules to ensure data can be shared securely but widely. Indeed, a SOCOM official described his focus as “architecting solutions that facilitate [AI] versus prescribing one piece of software,” stressing that the goal is to enable units across the enterprise to leverage data and create responsible AI rather than dropping in a single tool. This enabling approach ensures the right data is available in the right format for any future AI application.
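To make the cataloging idea concrete, here is a minimal sketch of what a catalog entry and a simple governance check might look like. It records what a dataset is, where it lives, and how it relates to other data. The field names, dataset names, and storage identifiers are illustrative assumptions, not a DoW or CDAO schema.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """One catalog entry: what the data is, where it lives, how it relates."""
    name: str
    description: str
    location: str            # hypothetical system or storage identifier
    classification: str      # e.g. "UNCLASSIFIED", "SECRET"
    owner: str = ""          # empty string means no steward assigned
    labeled: bool = False    # whether the data has been cleaned and labeled
    related_to: list[str] = field(default_factory=list)


def catalog_gaps(catalog: list[DatasetRecord]) -> dict[str, list[str]]:
    """Return, per dataset name, the governance gaps that block reuse."""
    known = {record.name for record in catalog}
    gaps: dict[str, list[str]] = {}
    for record in catalog:
        problems = []
        if not record.owner:
            problems.append("no owner")
        if not record.labeled:
            problems.append("not labeled")
        for other in record.related_to:
            if other not in known:
                problems.append(f"unknown related dataset: {other}")
        if problems:
            gaps[record.name] = problems
    return gaps


# Illustrative entries only; names and locations are made up.
catalog = [
    DatasetRecord("patrol_reports", "Free-text patrol summaries",
                  "text-db-01", "SECRET", owner="J2", labeled=True,
                  related_to=["map_overlays"]),
    DatasetRecord("map_overlays", "Geospatial overlays",
                  "gis-share", "UNCLASSIFIED", related_to=["drone_video"]),
]

for name, problems in catalog_gaps(catalog).items():
    print(name, "->", ", ".join(problems))
```

Even a check this simple shows the kind of gap that data triage is meant to surface: a dataset with no steward, no labels, or a reference to data that nobody has inventoried yet.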

Building the Digital Backbone: From Data to Deployment

Once an organization understands its data, the next step is defining and executing a foundational data stack. This is essentially the digital backbone, the cloud services, networks, platforms, and pipelines that move data from where it’s generated to where it’s needed. In practice, this meant that at SOCPAC, we had to stand up core data infrastructure: data integration platforms, visualization tools for situational awareness, and initial automation of data processing using AI. Only by visualizing our data and automating workflows could we expose gaps and frictions in how information moves. As we advanced, we confronted hard technical problems of data transport across formats and classifications, moving information between text databases, geospatial maps, video feeds, and even between different security domains. Issues like multi-modal data fusion, limited bandwidth (packet size), and edge device memory constraints are not small challenges; they are often the rate-limiters in deploying AI for warfighters.

Building this backbone goes hand-in-hand with modernizing the underlying IT infrastructure. Cloud computing and robust networks are critical. Many National Security organizations are adopting a hybrid cloud approach, using a mix of on-premises servers and commercial cloud, to ensure global, resilient access to data. For globally dispersed units, “it’s almost mandatory to leverage a hybrid [cloud] solution to architect the pipelines” that move data from the field to where it can be processed and back. America’s AI Action Plan released in 2025 zeroes in on these needs. It calls for major investments in computing power, cloud capacity, and modern data architecture to support AI at scale. In fact, the plan’s second pillar explicitly focuses on building AI infrastructure: accelerating the construction of data centers, modernizing the energy grid to power AI, and expanding high-performance computing capabilities. The message from the top is that without sufficient compute and connectivity, even the most advanced algorithms will falter. The Action Plan’s emphasis on “Building AI Infrastructure” confirms that cloud and data stack modernization are seen as prerequisites for AI dominance.

Key steps in establishing the digital backbone include:

- Data Integration and Cleaning: Aggregate data from legacy systems, sensors, and databases into a unified environment. Apply data cleaning and labeling so AI can interpret the information.

- Core Infrastructure Build-Out: Deploy scalable data storage and cloud computing resources (with appropriate security) to handle the volume and velocity of data. This may involve new data centers or leveraging commercial cloud providers (CSPs) with secure government regions.

- Tools for Visualization and Automation: Introduce platforms for data visualization (to allow analysts and commanders to see trends) and automation tools, including basic AI/ML models, to handle repetitive data processing tasks.

- Address Data Transfer Bottlenecks: Engineer solutions for moving data across domains and formats; for example, enabling classified networks to pull updates from unclassified sensors, or converting video feeds into text alerts. This often requires custom data pipelines, compression algorithms, and edge computing so that forward-deployed units aren’t cut off from insights.

Each of these steps builds on the previous. Skipping ahead to flashy AI applications without these elements in place is a recipe for disappointment. As the CDAO notes, their mission includes delivering “enterprise-level infrastructure and services” and scaling digital solutions, so that individual applications can plug into an ecosystem that is technically sound, secure, and interoperable by design.
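The first step in the list above, data integration and cleaning, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the source names (`legacy_db`, `sensor_feed`), the field mappings, and the required fields are hypothetical, and a real pipeline would also have to handle classification markings and cross-domain transfer rules.

```python
from typing import Iterable

# Hypothetical mapping from each source's field names to one shared schema.
FIELD_MAPS = {
    "legacy_db": {"id": "record_id", "ts": "timestamp", "loc": "location"},
    "sensor_feed": {"uid": "record_id", "time": "timestamp", "pos": "location"},
}
REQUIRED = ("record_id", "timestamp", "location")


def normalize(source: str, raw: dict) -> dict:
    """Rename one raw record's fields to the shared schema and tag its source."""
    mapping = FIELD_MAPS[source]
    unified = {mapping[key]: value for key, value in raw.items() if key in mapping}
    unified["source"] = source
    return unified


def integrate(batches: dict[str, Iterable[dict]]) -> tuple[list[dict], list[dict]]:
    """Merge all sources; return (clean records, rejected records)."""
    clean, rejected, seen = [], [], set()
    for source, records in batches.items():
        for raw in records:
            record = normalize(source, raw)
            missing = [f for f in REQUIRED if record.get(f) in (None, "")]
            if missing:
                # Keep incomplete records for review instead of discarding them.
                rejected.append({**record, "missing": missing})
            elif record["record_id"] not in seen:
                # The first record seen for an id wins; later duplicates are skipped.
                seen.add(record["record_id"])
                clean.append(record)
    return clean, rejected


clean, rejected = integrate({
    "legacy_db": [{"id": "A1", "ts": "2025-01-01T00:00Z", "loc": "grid-7"}],
    "sensor_feed": [
        {"uid": "A1", "time": "2025-01-01T00:05Z", "pos": "grid-7"},  # duplicate id
        {"uid": "B2", "time": "", "pos": "grid-9"},                   # missing timestamp
    ],
})
print(len(clean), "clean;", len(rejected), "rejected")
```

Keeping rejected records alongside the reason they failed is a deliberate choice in this sketch: it turns cleaning into a feedback loop for the data owners rather than a silent filter.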

Layering Advanced Capabilities on a Solid Base

With a robust data architecture in place, one that has visibility into data, handles the flows, and automates basic tasks, the stage is set to introduce more advanced technologies. This is where things like AI-driven decision support, autonomous platforms, and smart weapon systems can enter. But importantly, they are layered on top of the foundation, not dropped in as standalone solutions. A new algorithm for target recognition can be integrated into the common data platform so that its outputs feed directly into commanders’ dashboards. An autonomous drone can be fielded knowing that it can tap into the cloud data repository for the latest maps or intelligence updates.

At each step of this layering, the focus should be on foundational technologies that everything else builds on. In a National Security context, these include big data analytics tools, cloud services, and AI development frameworks, essentially the digital “soil” from which specific applications grow. Bringing in a cutting-edge AI model from a frontier lab or a promising autonomous vehicle startup makes sense only after the data backbone and compute environment can support it. The foundation must be able to supply high-quality training data, accommodate the model’s computational needs (e.g., GPU horsepower), and ingest the model’s outputs back into the operational picture. This deliberate sequencing is how we avoid the common trap of exciting technology that sits on the shelf unused. A great capability introduced too early, before the units know how to use it or before it can plug into workflows, will end up sidelined. We have seen it happen: a unit procures sophisticated analytic software, but with no integration plan, the tool becomes an orphan system that few troops trust or know how to operate.

The approach of layering also aligns with national guidance on responsible tech adoption. The 2025 National Security Strategy and related strategy documents highlight that deploying AI and autonomous systems must be done in a responsible, secure, and phased manner. The goal is to accelerate adoption without creating brittle or opaque systems. Done right, each new capability builds cumulatively on the digital ecosystem. For example, once an AI tool for data fusion is proven, it paves the way for an autonomous platform that relies on that fused data. This progressive enhancement ensures that by the time we reach truly advanced tech, say swarming drones with AI, they are supported by mature data feeds, cloud connectivity, and compute power to function reliably at scale.

Why Procurement Often Feels “Broken”

If all of the above sounds logical, why does the acquisition system so frequently deliver point solutions out of sequence? One reason is misaligned requirements. Too often, program offices write requirements and issue contracts for a specific “shiny object,” perhaps an AI-enabled analytics software or an autonomous vehicle, without explaining or funding the prerequisite steps. The foundation isn’t ready, but the contract is awarded anyway. The result is predictable: even great technology ends up underutilized or shelved because it cannot connect with anything else or fails to solve an immediate operational problem. A Pentagon study in 2023 noted that overly complex requirements and slow processes have left the DoW struggling to field capabilities that keep pace with threats. In many cases, requirements do “not always align to operational needs or keep pace with the rapid advancement of technology.” In plain terms, we ask for the wrong thing, at the wrong time.

Furthermore, the traditional DoW acquisition system rewards caution and thoroughness, which is good for safety, but bad for speed. It “stifles innovation” by rewarding process over outcome. Vendors often find themselves trying to sell a cutting-edge solution into an enterprise that hasn’t laid the groundwork to absorb it. From the government side, without a clear technology roadmap, procurement officials may default to buying a known widget (“we need an AI system that does X”) rather than investing in less tangible infrastructure (like data architecture or cloud services) that enable many systems. It’s easier to justify a budget line for a specific tool than for a nebulous “digital backbone.” This incentive mismatch leads to fragmented, unsequenced tech integration across programs.

Voices in the National Security tech community are highlighting these issues. Innovative acquisition leaders and even Congress have begun pushing for reforms to encourage more flexible, staged adoption of tech. For instance, the DoW’s CDAO has promoted an “Open AI Framework” concept, urging programs to follow a roadmap where data and dev/test platforms come first, and specific applications follow. Special Operations leaders have similarly stressed that a culture shift is needed, focusing on data and architecture before tools. “Leveraging data efficiently and effectively is the heart of AI,” one Army Special Ops officer noted, and getting there requires a cultural and structural change in how we plan projects. The bottom line is that procurement feels broken when it is not aligned with the technology maturation sequence. Without the right roadmap, money gets spent with little to show for it.

Testing, Evaluation, and Iterative Experimentation

One encouraging development is the DoW’s growing emphasis on test and evaluation (T&E) and experimentation as part of tech integration. If we want to be deliberate about sequencing and also move fast, we need feedback loops: chances to test new tools in realistic settings, evaluate performance, and fix flaws before full deployment. Organizations like the CDAO and initiatives like the Rapid Defense Experimentation Reserve are giving units an opportunity to try emerging technologies on a small scale. For example, Special Operations Command has hosted “agentic AI” experimentation events to let developers and operators assess AI tools together in field conditions. These events surface the dependencies and groundwork that AI tools need, often revealing, say, that a new algorithm requires better-labeled data or a faster network, which leads straight back to the foundation.

The CDAO has also partnered with industry to build rigorous testing frameworks. A notable effort is a partnership with Scale AI to develop a comprehensive T&E framework for the responsible use of large language models in the military. The aim is to create benchmark tests and evaluation datasets tailored to DoW use cases, ensuring that when generative AI systems are introduced, their performance and reliability have been vetted in context. As Scale’s CEO remarked, “Testing and evaluating generative AI will help the DoW understand the strengths and limitations of the technology, so it can be deployed responsibly.” This push for T&E is directly addressing the “explainability” and “resiliency” concerns. By measuring how AI models behave, their accuracy, biases, and failure modes, the DoW can gain confidence that new AI capabilities won’t behave unpredictably when it counts. It’s essentially building trust through testing.
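As a rough illustration of what measuring accuracy and failure modes means in practice, the sketch below runs a model over a small benchmark and reports overall accuracy, error rates by test category, and the individual failures. It is not the CDAO or Scale AI framework; the `evaluate` function, the toy model, and the benchmark cases are all hypothetical stand-ins.

```python
from collections import Counter
from typing import Callable


def evaluate(model: Callable[[str], str],
             cases: list[tuple[str, str, str]]) -> dict:
    """Run a model over (input, expected, category) cases and summarize."""
    failures = []
    per_category = Counter()
    errors_per_category = Counter()
    for prompt, expected, category in cases:
        per_category[category] += 1
        try:
            actual = model(prompt)
        except Exception as exc:  # a crash is a failure mode worth recording
            actual = f"<error: {exc}>"
        if actual != expected:
            errors_per_category[category] += 1
            failures.append((prompt, expected, actual))
    total = len(cases)
    return {
        "accuracy": (total - len(failures)) / total if total else 0.0,
        "error_rate_by_category": {
            c: errors_per_category[c] / n for c, n in per_category.items()
        },
        "failures": failures,
    }


def toy_model(text: str) -> str:
    """Stand-in classifier: flags any message mentioning 'convoy'."""
    return "flag" if "convoy" in text.lower() else "ignore"


# Made-up benchmark: three plainly worded cases and one paraphrase.
benchmark = [
    ("Convoy sighted near the bridge", "flag", "clear"),
    ("Weather update: light rain", "ignore", "clear"),
    ("Vehicles moving in column formation", "flag", "paraphrase"),
    ("No activity to report", "ignore", "clear"),
]

report = evaluate(toy_model, benchmark)
print(report["accuracy"], report["error_rate_by_category"])
```

Breaking results out by category matters more than the headline number. In this toy case the model looks acceptable overall but fails every paraphrased input, which is exactly the kind of limitation operators need to know about before deployment.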

We see similar efforts with the Pentagon’s new AI Rapid Capabilities Cell (AI RCC), launched by CDAO and the Defense Innovation Unit (DIU) in late 2024. Its charter is to pilot next-generation AI (like advanced generative AI models) in key use cases and do so in a way that “incorporates the standards, policy, and requirements from the beginning.” Importantly, the AI RCC is resourced not just to buy tools, but to invest in foundational AI infrastructure and tools that support those pilots. In other words, even the rapid pilots will spend significant effort on the enabling layers (data, cloud, security, policy) alongside the flashy new AI application. This is exactly the kind of deliberate sequencing we’ve been talking about, and it’s being validated in real-world National Security innovation efforts. The CDAO’s approach is to “meet DoW users where they are in their digital journeys” and help them progress from prototypes to scaled solutions by providing that enterprise platform and expertise.

The Way Forward: Deliberate Roadmapping and System Design

All these lessons point to one overarching mandate: be deliberate about sequencing technology adoption. Build the foundation first, align it with operational needs, and only then bring in the high-end capabilities. By following this progression, we can dramatically increase the speed of adoption and ensure that new systems are reliable and explainable. It’s not a call to slow down innovation; it’s a call to pave the road so innovation can sprint. As a Department, this means creating clear roadmaps that lay out the steps (data, infrastructure, basic analytics, advanced AI, etc.) and synchronizing requirements and budgets to that sequence. It also means educating both our acquisition workforce and our operational leaders on why the early, less glamorous work (like data governance or cloud refactoring) is essential to warfighting outcomes. When a spend plan comes in asking for an AI widget with no funding for data architecture or training, that should raise red flags.
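One way to picture a roadmap synchronized to that sequence is as a small dependency graph. The sketch below orders the stages and flags any funded stage whose prerequisites are neither funded nor complete. The stage names and the `red_flags` helper are illustrative assumptions, and the roadmap is assumed to contain no circular dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical roadmap: each stage maps to the stages it depends on.
ROADMAP = {
    "data_inventory": set(),
    "data_infrastructure": {"data_inventory"},
    "basic_analytics": {"data_infrastructure"},
    "advanced_ai": {"basic_analytics"},
}


def build_order(roadmap: dict[str, set[str]]) -> list[str]:
    """Return stages in an order that respects every dependency."""
    return list(TopologicalSorter(roadmap).static_order())


def red_flags(funded: set[str], completed: set[str],
              roadmap: dict[str, set[str]]) -> dict[str, list[str]]:
    """For each funded stage, list prerequisites neither funded nor done."""
    covered = funded | completed
    flags = {}
    for stage in sorted(funded):
        # Walk the full chain of prerequisites, not just the direct ones.
        pending, missing = list(roadmap[stage]), set()
        while pending:
            prereq = pending.pop()
            if prereq not in covered:
                missing.add(prereq)
            pending.extend(roadmap[prereq])
        if missing:
            flags[stage] = sorted(missing)
    return flags


print(build_order(ROADMAP))
# A spend plan that funds only the AI application, with just the inventory done.
print(red_flags(funded={"advanced_ai"}, completed={"data_inventory"},
                roadmap=ROADMAP))
```

In this example the spend plan is flagged because it pays for the advanced AI stage while skipping the infrastructure and analytics stages beneath it, the same pattern the paragraph above warns about.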

There are encouraging signs: the 2025 National Security Strategy explicitly ties emerging tech adoption to upgrading digital infrastructure and urges responsible AI deployment that is thoroughly tested and trusted. In the same vein, the National Security Strategy and America’s AI Action Plan are prioritizing “digital backbone” investments, from cloud computing to forward-deployed data networks, as key enablers of AI and autonomy. These high-level strategies are essentially telling program managers and industry: focus on the fundamentals first. If we heed that advice, the payoff will be huge. We’ll field new capabilities faster, because we won’t be constantly reworking basics or trying to force incompatible tech to work together. And importantly, our AI and autonomous systems will be more resilient and explainable. They’ll fail less often, and when they do fail, we’ll understand why (thanks to built-in test harnesses and transparent data flows).

Conclusion

The future of U.S. National Security technology lies in smart sequencing and integration. We must resist the temptation of quick wins that skip steps. Instead, we should double down on deliberate roadmapping and system design. Build the data foundations, modernize the compute and cloud infrastructure, and foster a culture of continuous testing and learning. With this approach, when advanced AI and autonomous systems do arrive on the battlefield, they will plug into a robust ecosystem ready to amplify their impact. The call to action for National Security tech professionals, engineers, acquisition officials, and national security leaders alike is to champion this disciplined approach. Insist on the roadmap that goes from foundation to frontier. Ask the tough questions early: “Is our data ready? Do we have the cloud and compute in place? How will we test and maintain this at scale?” By sequencing our tech integration deliberately, we can accelerate adoption and ensure our systems remain resilient, secure, and effective when it matters most. In short, first build the foundation, and the rest will follow.
