Building the War Cloud: Why the Department of War Needs Modern AI Infrastructure

The United States is racing to harness artificial intelligence (AI) as a cornerstone of military power, but the Department of War’s traditional IT infrastructure is holding it back. Legacy systems built for an earlier era struggle to support the data-hungry, GPU-intensive workloads that modern AI demands. To field advanced AI capabilities at mission scale, the DoW must embrace the same infrastructure best practices that drive Silicon Valley: hybrid multi-cloud architectures, GPU-dense supercomputing clusters, and multi-cluster Kubernetes orchestration.

This modern “AI stack” is not just a tech upgrade; it’s the foundation on which large language models (LLMs), autonomous systems, and computer vision (CV) models will operate effectively, even in contested or disconnected edge environments. Below, we explore why yesterday’s infrastructure won’t win tomorrow’s wars, what a modern AI stack looks like, and how adopting commercial best practices across secure multi-cloud environments will empower the DoW’s AI vision.

Legacy Infrastructure: A Roadblock for AI

The DoW’s existing infrastructure was never designed with AI in mind. Traditional military networks and data centers often rely on aging hardware, CPU-centric servers, and siloed on-premise systems. These setups can handle routine data processing or enterprise IT tasks, but AI workloads are a different beast. Training or deploying cutting-edge models like LLMs means running networks with billions of parameters over massive datasets, which requires parallel processing power available only from specialized accelerators (GPUs, TPUs) in great numbers. Legacy DoW systems simply lack the sheer compute density and high-performance networking needed. In fact, AI’s compute and energy demands are so unprecedented that it’s been called “the first digital service in modern life” to challenge America’s capacity for power generation. That is a stark warning: without serious upgrades, the status quo DoW infrastructure will be a bottleneck that starves AI projects of the fuel (computing power and data throughput) they need to run.

Moreover, traditional acquisition and deployment models in the defense realm have emphasized security and stability over speed and scalability. The result is often closed, bespoke systems that are hard to scale out or modify. But AI technology is evolving at breakneck speed; new models, frameworks, and tools emerge monthly. If the DoW can’t readily integrate new hardware or distribute workloads, it will fall behind. In the commercial world, companies long ago learned that rigid, on-premise servers would not suffice for AI; cloud providers and tech firms built flexible, scalable architectures to accommodate AI growth. The DoW must do the same or risk its AI initiatives stalling on outdated gear.

Finally, network constraints and isolated data centers make it hard to push AI to the tactical edge. Current systems often assume constant connectivity to a central server or rely on base-centric computing. But in real-world missions, warfighters operate in austere environments where connectivity is intermittent and local compute is limited. A Predator drone or a naval vessel cannot wait for a distant data center to process an image or update an AI model during a mission. Without on-site AI computing capability, the effectiveness of AI in contested or disconnected scenarios plummets. Traditional infrastructure isn’t built for this forward deployment of compute, but a modern AI stack can be.

What a Modern AI Stack Looks Like

So, what exactly do we mean by a “modern AI stack”? In essence, it’s the kind of architecture pioneered by leading cloud and AI companies, composed of flexible cloud services, powerful GPU hardware, and containerized software managed at scale. Key elements include:

- Hybrid Multi-Cloud Architecture: Rather than relying on a single on-premise server farm or one cloud vendor, a hybrid multi-cloud approach uses a mix of on-premises data centers and multiple cloud providers (e.g., AWS, Azure, Google, Oracle) to ensure both agility and resilience. Workloads can move or burst between environments; for example, tapping commercial cloud GPUs for extra capacity during large training runs, while keeping sensitive data on secure on-prem infrastructure. This diversity prevents lock-in and offers fail-safes; if one environment is compromised or unavailable, operations continue elsewhere. The DoD’s Joint Warfighting Cloud Capability (JWCC) program embraces exactly this approach, enabling direct use of commercial cloud services at all classification levels, from unclassified to Top Secret, from headquarters data centers to tactical edge deployments. In other words, multi-cloud is becoming the default for mission IT, providing global reach and redundancy.

- GPU-Dense Compute Clusters: Modern AI runs on GPUs, thousands of them. Tech companies build GPU superclusters (sometimes with tens of thousands of accelerators) to train frontier models. These clusters provide the high parallelism and memory bandwidth AI needs. For the DoW, this means investing in GPU-dense servers and high-performance computing nodes in its data centers, akin to the systems powering OpenAI or Google’s AI labs. Oracle, for instance, touts delivering “mission-ready AI infrastructure through GPU superclusters and bare-metal high performance computing” to support model training, autonomous systems, and AI inference even in classified settings. Such clusters can crunch intelligence data, train CV algorithms on drone footage, or run complex simulations that legacy CPUs would take ages to complete. The bottom line: without ample GPUs, the DoW cannot hope to develop or field state-of-the-art AI models.

- Multi-Cluster Kubernetes Orchestration: Kubernetes, the open-source system for automating deployment and management of containerized applications, is a linchpin of modern cloud operations. In a state-of-the-art AI stack, you don’t manually install software on each server; you package AI models and services into containers and let Kubernetes deploy and scale them across a cluster (or across multiple clusters). Multi-cluster Kubernetes means having separate clusters (say one per cloud or per on-prem site) that can be managed in concert. This setup brings several advantages crucial for the DoW: consistency, scalability, and portability. Developers can define an AI application once (for example, a container with an image recognition model and an API) and run it anywhere (on a jet, on a tank, in a cloud region, or in a classified lab) with consistent behavior. Kubernetes handles finding available resources, restarting instances if they fail, and scaling out replicas under heavy load. Critically, Kubernetes is built with resilience in mind; it can run across hybrid environments and recover from node failures, which is invaluable in wartime scenarios. By adopting containers and Kubernetes, the DoW can iterate faster on AI capabilities and ensure new models quickly reach the field. Commercial firms have used these tools for years to deploy everything from web services to AI microservices globally, and those lessons are directly applicable to defense.

- Open-Source and Interoperable Ecosystems: Another hallmark of the modern AI stack is leveraging open-source software and common frameworks, from AI libraries (TensorFlow, PyTorch) to infrastructure as code. The White House’s AI Action Plan even calls for “open-source and open-weight” AI models to drive innovation. Embracing open ecosystems means the DoW can stand on the shoulders of the tech community rather than reinventing the wheel. It also ensures interoperability, important if different branches or allies need to collaborate on the same systems. An open, standards-based approach (using open APIs, file formats, etc.) will let DoW AI systems plug into allied networks or quickly swap components without massive rework. For example, Oracle’s cloud pitch for JWCC highlights multicloud interoperability via open standards, APIs, and portable workloads, with DevSecOps pipelines for both open-source and proprietary models. In practice, this means a DoW data scientist could develop a model using popular tools and deploy it on any approved cloud or edge device without compatibility issues. The flexibility to innovate rapidly, unencumbered by proprietary lock-in or archaic standards, is a major best practice the DoW should adopt.

In summary, a modern AI stack resembles a nervous system that spans clouds and edges, automatically allocating GPU-rich computing wherever needed, and running AI software in portable containers for maximum agility. This is the architecture powering breakthroughs in the private sector, and it’s what the DoW must internalize to unlock AI at scale.
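The placement behavior described in the hybrid multi-cloud bullet (sensitive work stays on accredited on-prem hardware, large jobs burst to commercial GPUs) can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `Environment` and `Workload` types, the integer classification scale, the environment names, and the preference order are hypothetical and do not describe any actual DoW or JWCC scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    max_classification: int  # highest data level the site may hold (hypothetical scale)
    free_gpus: int
    on_prem: bool


@dataclass(frozen=True)
class Workload:
    name: str
    classification: int
    gpus_needed: int


def place(workload, environments):
    """Return the name of the environment chosen for a workload, or None if nothing fits."""
    candidates = [
        env for env in environments
        if env.max_classification >= workload.classification
        and env.free_gpus >= workload.gpus_needed
    ]
    if not candidates:
        return None
    # Assumed policy: prefer on-prem capacity, then the site with the most free GPUs.
    best = max(candidates, key=lambda env: (env.on_prem, env.free_gpus))
    return best.name


# Illustrative inventory; names and numbers are invented.
ENVIRONMENTS = [
    Environment("on-prem-secure", max_classification=3, free_gpus=8, on_prem=True),
    Environment("commercial-cloud-a", max_classification=1, free_gpus=512, on_prem=False),
    Environment("commercial-cloud-b", max_classification=1, free_gpus=256, on_prem=False),
]

print(place(Workload("sensitive-finetune", classification=3, gpus_needed=4), ENVIRONMENTS))
print(place(Workload("large-training-run", classification=1, gpus_needed=128), ENVIRONMENTS))
```

In this sketch the sensitive job lands on the on-prem site because no other environment is cleared for it, while the large unclassified run bursts to a commercial cloud because the on-prem site lacks the GPUs. A real scheduler would also weigh cost, data locality, and network conditions.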

Replicating the Architecture Across Secure Clouds

A key challenge for the Department of War is that it operates in a much more sensitive and adversarial context than a typical company. Military AI workloads often involve classified data (intelligence feeds, operational plans) and must function under an active cyber threat. So how can the DoW adopt a Silicon Valley-style infrastructure while maintaining extreme security and reliability? The answer is to replicate the modern AI architecture across secure, air-gapped, and multi-cloud environments: in essence, to build “the war cloud” as a federated system.

Practically, this means establishing high-security versions of cloud and cluster infrastructure in DoW-controlled spaces. America’s AI Action Plan recognizes this need, calling for “high-security data centers for military and Intelligence Community usage” where AI models on sensitive data can be deployed with resilience against nation-state attacks. The DoW should design these data centers to mirror the best of commercial hyperscalers: filled with GPU servers, container orchestration, and automation, but isolated and hardened. Think of it as creating mini-Azure or AWS regions for Top Secret/SAP networks. In fact, cloud vendors are already partnering on this: Oracle’s National Security Regions and services like the AWS Secret and Top Secret regions (the classified counterparts to AWS GovCloud, which is authorized only up to IL5) offer accredited clouds at IL6/SAP levels, featuring the same kind of GPU horsepower and tools available in commercial regions. By leveraging such offerings and layering DoW-specific security controls, the department can stand up multiple secure cloud environments that all adhere to a common architecture blueprint.

Multi-cloud replication is crucial for resilience. If the DoW builds all its AI capability in only one environment, it becomes a juicy single point of failure, whether due to cyber attack, outage, or even bureaucratic hiccups. A warfighter in the field doesn’t care whether the AI backup server is run by Amazon, Microsoft, or Oracle; they just need it to work when the primary goes down. By spreading AI workloads across multiple clouds and on-prem nodes, the DoW can ensure continuity. This also aligns with zero-trust principles and robust cyber defense: a diverse, distributed infrastructure is harder for an adversary to completely disable. As a bonus, multi-cloud competition can drive cost efficiency, something not lost on policymakers. The National Security Strategy emphasizes maintaining our edge in technology while being prudent with resources; a multi-vendor cloud strategy encourages both innovation and cost discipline, as providers vie to offer better AI services (from cheaper GPUs to specialized AI chips).
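The resilience argument can be made concrete with a little arithmetic. The sketch below assumes each environment fails independently, which is a strong assumption (a coordinated attack or a shared dependency would break it), and the 99% availability figure is purely illustrative rather than a measured value for any provider.

```python
import math


def combined_availability(availabilities):
    """Probability that at least one environment is up, assuming independent failures."""
    all_down = math.prod(1.0 - a for a in availabilities)
    return 1.0 - all_down


# Illustrative numbers only: each environment is assumed to be up 99% of the time.
single = combined_availability([0.99])
triple = combined_availability([0.99, 0.99, 0.99])

print(f"one environment:    {single:.6f}")
print(f"three environments: {triple:.6f}")
```

Under these assumptions, the chance that every environment is down at once falls from one in a hundred to one in a million. Correlated failures would erode that gain, which is one reason the diversity of vendors and sites matters as much as the count.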

Another dimension is geographic and network spread. AI infrastructure must span from core data centers all the way to forward operating bases and platforms. The JWCC’s mandate explicitly includes support for “mission readiness from headquarters to the tactical edge”, meaning any cloud solution must extend its reach to war zones, ships at sea, and beyond. This could involve portable or modular data centers that bring cloud capabilities to the unit. In a recent naval exercise, for instance, a private company deployed a modular AI data center (“Galleon”) on a Navy warship, providing high-performance compute and networking in a contested maritime environment. This created a secure hybrid cloud at sea, processing data from autonomous systems in a bandwidth- and power-constrained setting. Such examples showcase the art of the possible: a “cloud in a box” that travels with forces, yet links back to larger cloud ecosystems when communications permit. The DoW should cultivate multiple flavors of these deployable AI hubs, each built on the same core stack (containerized apps orchestrated over GPUs), whether it’s a fixed, hardened data center in Colorado or a ruggedized server rack flown into an FOB in Africa.

Security, of course, remains paramount. Modern cloud infrastructure actually offers improvements here if done right: fine-grained identity management, constant software patching, and uniform configurations can reduce the human errors that plague bespoke systems. The DoW can incorporate defensive measures at every layer, from encryption of data in transit and at rest, to strict role-based access, to continuous monitoring of model behavior. Adopting best practices means adopting best-in-class security practices as well, many of which are built into contemporary cloud platforms.

However, with great power comes new vulnerabilities: a misconfigured Kubernetes cluster or a compromised software supply chain can jeopardize many systems at once. A recent security analysis highlighted how “overly permissive orchestration settings” or weak update pipelines could let attackers gain broad control of GPU clusters; one bad container image could “cascade through many workloads” if not checked. This is a sober reminder that the DoW’s modern AI stack must bake in rigorous DevSecOps. By mirroring commercial and cybersecurity best practices, the department can enjoy the benefits of scale without opening the floodgates to adversaries. In short, security by design must underpin every cloud region, cluster, and application in the war cloud.
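One way to picture "baking in" DevSecOps is an automated check that rejects risky container definitions before they reach a cluster. The sketch below is a minimal illustration, not a real admission controller: the dictionary schema, the `audit_container` function, and the registry name are all hypothetical, and the three rules are only examples of the kind of settings such a check might enforce.

```python
# Hypothetical allowlist of trusted image registries.
APPROVED_REGISTRIES = ("registry.internal.example/",)


def audit_container(spec):
    """Return a list of policy violations for one container spec (a plain dict)."""
    violations = []
    image = spec.get("image", "")
    if not image.startswith(APPROVED_REGISTRIES):
        violations.append("image is not from an approved registry")
    if "@sha256:" not in image:
        # A mutable tag such as ":latest" can be silently replaced upstream.
        violations.append("image is not pinned to a digest")
    if spec.get("privileged", False):
        violations.append("container requests privileged mode")
    return violations


# Illustrative specs; the digest is a placeholder, not a real image.
good = {"image": "registry.internal.example/cv-model@sha256:" + "0" * 64, "privileged": False}
bad = {"image": "docker.io/someone/cv-model:latest", "privileged": True}

print(audit_container(good))
print(audit_container(bad))
```

Running a check like this in the deployment pipeline means a single bad image is stopped once, at the gate, instead of cascading through many workloads.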

From Cloud to the Edge: AI at Mission Scale

Ultimately, the drive for a modern AI infrastructure is in service of operational capabilities. What do we gain when the Department of War has this foundation in place? We gain the ability to deploy advanced AI (large language models, autonomous decision agents, and real-time CV analytics) wherever the mission demands, with confidence in their performance and reliability. This is essential if such AI systems are to be more than tech demos and actually become war-winning tools.

Consider large language models and AI assistants (“LLM agents”) for intelligence analysis or command decision support. These models (think GPT-4 class or custom DoD-trained models) are enormously compute-intensive. Running them in a timely fashion might require a dozen or more high-end GPUs just for inference on one user’s query, not to mention the training or fine-tuning process. Under a legacy setup, an analyst in the theater might not have access to that kind of computational muscle. But with a modern hybrid cloud, that analyst can tap into a pool of GPUs via an edge deployment or satellite link, getting answers from an AI model that lives partly in a regional cloud and partly on local servers. If the connection cuts out, an edge-local model (maybe a distilled, smaller version of the LLM) could take over, ensuring continuity, all orchestrated by the underlying platform. This seamless handoff between cloud and edge is only possible with the Kubernetes-driven, multi-cloud fabric we described. Without it, the DoW would face a hard choice: run only tiny models locally (limiting capability), or rely on reach-back to a distant data center (risking downtime). A well-architected war cloud resolves that dilemma by supporting both.
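The cloud-to-edge handoff can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `LinkDown` exception, the `answer` function, and both stand-in "models" are hypothetical, and the fallback is decided in the caller here, whereas the article envisions the platform orchestrating it.

```python
class LinkDown(Exception):
    """Raised when the reach-back link to the regional cloud is unavailable."""


def answer(query, cloud_model, edge_model):
    """Try the large cloud-hosted model first; fall back to the local distilled model."""
    try:
        return "cloud", cloud_model(query)
    except LinkDown:
        return "edge", edge_model(query)


def make_cloud_model(link_up):
    """Build a stand-in for a remote model call that fails when the link is down."""
    def cloud_model(query):
        if not link_up:
            raise LinkDown("no route to regional cloud")
        return f"detailed answer to: {query}"
    return cloud_model


def edge_model(query):
    """Stand-in for a smaller, distilled model running on local hardware."""
    return f"brief answer to: {query}"


print(answer("summarize latest reports", make_cloud_model(link_up=True), edge_model))
print(answer("summarize latest reports", make_cloud_model(link_up=False), edge_model))
```

The caller always gets a response along with a label saying which tier produced it, so an analyst can judge how much weight to give an answer from the smaller model.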

We see similar needs with autonomous systems, like drones, UUVs, or robotic vehicles. These platforms generate tons of sensor data and need AI to make split-second decisions (e.g., target recognition, navigation). They benefit from cloud training (to continuously improve their models with aggregated data) but must operate autonomously when cut off. A modern infrastructure allows for continuous integration of AI models: new algorithms trained on the cloud can be packaged into containers and pushed out to deployed robots through automated pipelines, even over low-bandwidth links.

Conversely, data collected at the edge can sync back to the cloud when possible, enabling retraining or analysis in rear hubs. This vision, often called “AI at the edge,” is already embraced by industry. It’s estimated that by 2028, edge computing spending will reach $380B, driven in large part by AI needs in low-connectivity scenarios. The DoW’s mission scenarios (contested communications, electronic warfare, etc.) are exactly those that demand robust edge AI. Only by having cloud-grade infrastructure replicated in miniature at the tactical edge can we run, say, a computer vision model on a drone swarm in the jungle with the same efficacy as in a stateside lab.
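The "sync back when possible" pattern is often implemented as a store-and-forward buffer. The sketch below is one minimal way to do it, assuming a bounded in-memory queue that discards the oldest records when storage runs out; the `EdgeBuffer` class and its method names are hypothetical, and a fielded system would persist to disk and authenticate uploads.

```python
from collections import deque


class EdgeBuffer:
    """Bounded store-and-forward queue for records collected while disconnected."""

    def __init__(self, capacity):
        # deque(maxlen=...) drops the oldest record automatically when full.
        self._pending = deque(maxlen=capacity)

    def record(self, item):
        self._pending.append(item)

    def sync(self, upload, link_up):
        """Upload pending records oldest-first while link_up() is True; return the count sent."""
        sent = 0
        while self._pending and link_up():
            upload(self._pending[0])  # upload before removing, so a failed upload keeps the record
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)


buffer = EdgeBuffer(capacity=3)
for frame in ["f1", "f2", "f3", "f4"]:
    buffer.record(frame)  # "f1" is dropped once the fourth frame arrives

received = []
sent = buffer.sync(received.append, link_up=lambda: True)
print(sent, received)
```

Dropping the oldest data first is only one possible policy; a mission might instead prioritize by sensor or by detection confidence when bandwidth and storage are scarce.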

Computer vision for ISR (intelligence, surveillance, and reconnaissance) is another killer app. Whether it’s processing satellite imagery or full-motion video from a Reaper drone, AI can vastly speed up target detection and threat analysis. But that requires distributing the processing intelligently. In a modern AI stack, one could deploy a CV inference service across a fleet of edge nodes (on aircraft or ground stations) using Kubernetes, while a central cloud cluster handles periodic model updates or more complex queries. This distributed approach was demonstrated in the UNITAS exercise, where the aforementioned modular data centers processed live drone feeds using what was termed the “American AI Stack”. The result: warfighters got timely insights despite being in a “communications-denied” environment, because the computation was happening right there with them. It’s a prime example of how the right infrastructure enables AI to function when and where it’s needed, not just in pristine lab conditions.

One more aspect to highlight is testing and evaluation (T&E). The DoW and Chief Digital and AI Office (CDAO) have been actively benchmarking AI models to ensure they meet mission requirements. These evaluations, whether on the truthfulness of LLMs or the reliability of AI in combat simulations, generate valuable data on model performance. But to act on that data (e.g., fine-tune a model or swap it out), the underlying infrastructure must support rapid iteration. A containerized AI pipeline can move a model from test to staging to production with minimal friction, leveraging the same cloud resources.

For example, a test might reveal that a certain CV model is underperforming in identifying camouflaged vehicles. With a robust AI platform, engineers can quickly train a better model (using, say, additional synthetic data), validate it, and then deploy it via the cloud to all relevant edge devices within hours. The alternative in a legacy setup might take weeks or months to roll out an updated algorithm across various standalone systems. In the fast-paced threat environment, that agility can literally be life-saving. The AI Action Plan calls for an “AI & Autonomous Systems Virtual Proving Ground,” essentially a simulation and test environment, which would rely on heavy compute and cloud-based sharing of results. Here again, the technologies of the modern AI stack (scalable compute on demand, common platforms for sharing models) are enablers for policy directives.
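The test-to-staging-to-production flow can be sketched as a promotion gate that refuses to advance a model whose evaluation score falls short. This is an illustration under assumptions, not a description of any CDAO process: the stage names follow the text, but the `promote` function, the dictionary fields, the model name, and the accuracy threshold are hypothetical.

```python
STAGES = ("test", "staging", "production")


def promote(model, min_accuracy):
    """Advance a model one stage if its evaluation score clears the threshold."""
    if model["accuracy"] < min_accuracy:
        raise ValueError(
            f"{model['name']} scored {model['accuracy']:.2f}, below {min_accuracy:.2f}"
        )
    index = STAGES.index(model["stage"])
    if index == len(STAGES) - 1:
        return model  # already in production; nothing to do
    return {**model, "stage": STAGES[index + 1]}


# Illustrative candidate: a retrained CV model with an invented evaluation score.
candidate = {"name": "cv-camouflage-v2", "stage": "test", "accuracy": 0.93}
candidate = promote(candidate, min_accuracy=0.90)  # test -> staging
candidate = promote(candidate, min_accuracy=0.90)  # staging -> production
print(candidate["stage"])
```

Because the gate is code, not a manual sign-off, the same check runs identically for every model and every environment, which is what makes an hours-long rollout plausible without skipping validation.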

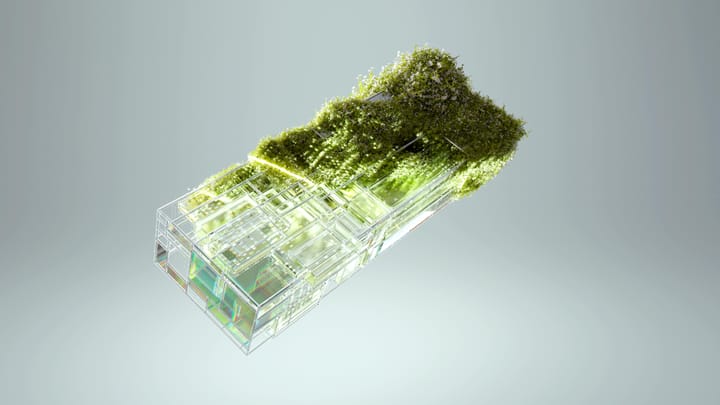

An example of modern AI infrastructure at the tactical edge: a modular, GPU-powered data center being deployed on a Navy ship during a contested environment exercise. Such “cloud-in-a-box” solutions delivered high-performance computing and networking on-site, allowing warfighters to run AI workloads even with limited connectivity. This demonstrates how hybrid cloud architecture can extend from centralized facilities all the way to mobile, forward-deployed units.

Conclusion: Foundation for the Future Fight

AI is poised to transform warfare, but it’s only as effective as the infrastructure it runs on. The Department of War cannot rely on yesterday’s tech foundation to support tomorrow’s autonomous drones, AI battle managers, or intelligent cyber defenses. We need the kind of foundation that America’s top tech firms have built for themselves: one that is scalable, resilient, and ubiquitous. By adopting a hybrid multi-cloud strategy, investing in GPU-rich compute clusters, and orchestrating everything with technologies like Kubernetes, the DoW will position itself to leverage AI as a true force multiplier. This isn’t a blind leap into unproven tech; it’s taking the proven best practices from industry and applying them to the most critical mission of all: national security.

Leaders across defense and policy circles are starting to recognize this imperative. The 2025 National Security Strategy emphasizes that America’s advanced technology sector “provides a qualitative edge to our military”, and maintaining that edge means out-innovating adversaries in AI. Similarly, America’s AI Action Plan underscores building out national AI infrastructure (from power grids to secure data centers) as a pillar of competitiveness. The DoW’s task is to translate these strategic calls into concrete capabilities; essentially, to build an AI-ready warfighting network that mirrors the flexibility and scale of the commercial cloud, yet fortified for battle.

The payoff will be significant. With a modern AI stack in place, future LLM-based advisors could help commanders sift intelligence in seconds, autonomous systems could operate farther and more reliably in denied environments, and mission planners could spin up vast simulations to rehearse operations with AI-driven adversary forces. All of this becomes feasible when the infrastructure ceases to be the limiting factor. In the past, the military led in technologies like GPS and networking; in the era of cloud-scale AI, it must be humble enough to learn from outside and leapfrog where it can. By forging its own “war cloud,” a secure, distributed, AI-optimized infrastructure, the Department of War can ensure that our cutting-edge algorithms and models have a worthy home, from the cloud to the tactical edge. In the high-stakes competition for AI advantage, building this foundation is not just an IT strategy but a strategic necessity. The warfighters of tomorrow deserve nothing less.
